I’ve been piloting the use of GPT4 for work and personal projects lately. I am something of an AI skeptic, but GPT4 (in particular) has surprised me with its ability to perform fairly complicated tasks and write code. So much of programming is doing a sequence of rote operations on data of some kind (especially true of the sort of code written by data scientists or in an ETL pipeline), and it is pretty feasible to just instruct GPT4 to write such code for you. I am fluent in a variety of programming languages but, like most folks, I rely on reference material. GPT4 allows me to skip the cumbersome steps of looking up a function name, reading its documentation, figuring out how to prepare the data for it, etc. It is much like a compact reference which can also glue things together. It has made me more productive, even if I frequently have to correct things manually.

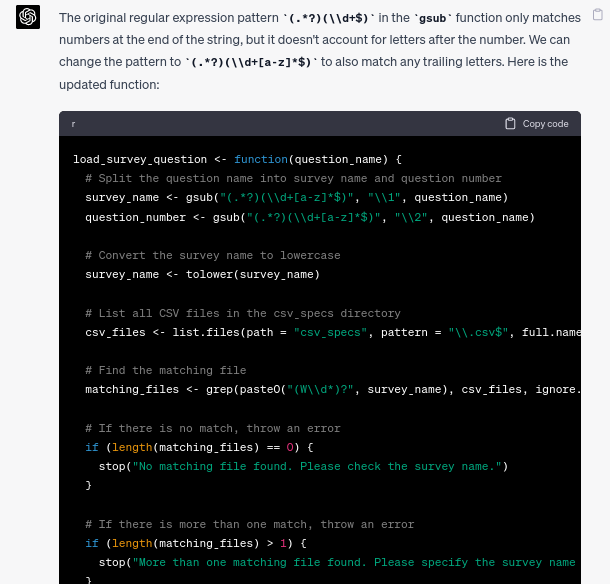

Another aspect of developing with GPT is that it is conversational: you can simply ask it to refine a solution it has provided by describing either the conceptual mistake it has made or a new element you want added, and then iterating a few times.

I think partly because human beings are social animals (even us non-neurotypicals), this conversational mode of development feels very productive and natural. But it is also insanely wasteful. Running these models is compute-intensive (and therefore energy-intensive). There may be an argument for discounting the training costs, since they are (mostly) paid up front and the resulting model can be used afterward with only minor refinements. But the above change would have been both faster and much less energetically expensive had I simply modified the regex myself.

Part of the problem is that GPT4 is incredibly prolix. For every question you pose, it typically returns an entire rewrite of the code in question along with a (usually supererogatory) explanation. This is a service literally billed by the token, which suggests that tokens are at least not “too cheap to meter.”

It is dismal to think that before GPT my energy expenditure as a developer was typically not much more than that of my laptop, my body, and the accoutrements of modern life (lighting, air conditioning, etc.). I wouldn’t be surprised to find that ChatGPT has ballooned that number non-trivially.

I don’t think LLMs are a direct threat to jobs yet. Even GPT routinely reminds you that it is exactly what it is: a large model meant to predict the next token in a sequence of tokens. But if they were, I’m not sure their wastefulness would be enough to prevent them from supplanting human workers. Even if we are cheap per watt, we are hard to manage and have outrageous demands like wanting half of our waking life for work not controlled by capital. Like almost all technology, LLMs will eventually be put into the service of power. Not looking forward to it.